Welcome to our comprehensive guide on cluster analysis. In this article, we will explore the significance of clusters and the diverse applications of cluster analysis in various fields. Whether you are a marketer, biologist, finance professional, or social scientist, understanding cluster analysis is essential for uncovering patterns and relationships within your data.

Cluster analysis plays a crucial role in identifying genetic markers associated with specific diseases, detecting anomalies in financial transactions, classifying social media users, and segmenting customers based on their buying behaviors in market research. By grouping similar objects or data points together, cluster analysis helps in revealing insights that contribute to informed decision-making and problem-solving.

In the following sections, we will delve deeper into the definition and purpose of cluster analysis, explore different types of cluster analysis techniques, discuss data preparation strategies, learn how to determine the optimal number of clusters, interpret and visualize cluster analysis results, understand common mistakes and disadvantages, and discover best practices for successful cluster analysis.

Whether you are new to cluster analysis or seeking to enhance your understanding of this valuable technique, this guide will provide you with the knowledge and resources needed to leverage the power of clusters for your own analysis and research.

Introduction to Cluster Analysis

Cluster analysis is a statistical technique that groups a set of objects or data points into clusters based on their similarity. It plays a crucial role in exploring data patterns and structures, providing valuable insights into underlying relationships and associations within a dataset.

The purpose of cluster analysis is to identify meaningful clusters or subgroups in the data, which can be useful for various applications such as customer segmentation, image recognition, anomaly detection, and more. By grouping similar objects together, cluster analysis helps in organizing and making sense of complex datasets, enabling researchers, businesses, and organizations to extract relevant information and derive actionable insights.

Cluster analysis forms the foundation for many other data analysis techniques and machine learning algorithms. It serves as a starting point for understanding the inherent structure and characteristics of the data, which can then be leveraged for further analysis and decision-making processes. With its ability to uncover hidden patterns and relationships, cluster analysis has become an indispensable tool in fields such as marketing, finance, biology, social sciences, and beyond.

Example Applications of Cluster Analysis:

- Market Research: Identifying distinct customer segments based on their purchasing behavior, demographics, and preferences.

- Healthcare: Grouping patients based on their genetic markers or medical history to personalize treatment plans or detect disease clusters.

- Image Segmentation: Separating objects in an image based on their similarities in color, texture, or shape.

- Fraud Detection: Detecting abnormal patterns in financial transactions by clustering similar behaviors.

By understanding the definition and purpose of cluster analysis, we can see its significance in uncovering hidden patterns and unlocking valuable insights within complex datasets. In the next section, we will explore the different types of cluster analysis techniques and their applications.

Types of Cluster Analysis

Cluster analysis encompasses various techniques, each with its own approach and purpose. In this section, we will explore the different types of cluster analysis methods, including hierarchical clustering, k-means clustering, model-based clustering, density-based clustering, and fuzzy clustering.

Hierarchical Clustering

Hierarchical clustering constructs a hierarchy of clusters by iteratively merging or splitting groups based on their similarities. This method is useful for visualizing the relationships between clusters and identifying subclusters within larger groups. It can be agglomerative, starting with individual data points and progressively merging them, or divisive, beginning with a single cluster and recursively dividing it.
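To make the agglomerative variant concrete, here is a minimal sketch in plain Python. It assumes single linkage (the distance between two clusters is the distance between their closest members) and Euclidean distance; the function name `single_linkage` and the sample points are illustrative only, and in practice a library implementation would normally be used.

```python
import math

def single_linkage(points, n_clusters):
    """Agglomerative clustering: start from singletons, repeatedly merge the two closest clusters."""
    clusters = [[i] for i in range(len(points))]  # each cluster is a list of point indices
    merges = []  # (members_a, members_b, distance), in merge order

    def linkage(a, b):
        # Single linkage (an assumption of this sketch): closest pair across the two clusters.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        # Find the pair of clusters with the smallest linkage distance.
        d, ia, ib = min(
            (linkage(clusters[a], clusters[b]), a, b)
            for a in range(len(clusters))
            for b in range(a + 1, len(clusters))
        )
        merges.append((clusters[ia], clusters[ib], d))
        merged = clusters[ia] + clusters[ib]
        clusters = [c for k, c in enumerate(clusters) if k not in (ia, ib)] + [merged]
    return clusters, merges

points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (10.0, 0.0)]
clusters, merges = single_linkage(points, n_clusters=3)
print(clusters)  # lists of point indices, one list per cluster
```

The recorded `merges` list is the information a dendrogram would display: which groups were joined, and at what distance.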

K-means Clustering

K-means clustering is a partitioning method that groups data points into K clusters, where K is a predefined number. It minimizes the sum of squared distances between each data point and the centroid of its assigned cluster. K-means clustering is efficient and widely used for its simplicity, making it suitable for large datasets.
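The procedure can be sketched in a few lines of plain Python. This is a minimal illustration of the usual assign-then-update loop (often called Lloyd's algorithm), assuming Euclidean distance and random initial centroids drawn from the data; the function name `kmeans`, its parameters, and the sample points are made up for the example.

```python
import math
import random

def kmeans(points, k, n_iter=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumption: initial centroids are random data points
    for _ in range(n_iter):
        # Assignment step: each point goes to its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            if members:
                new_centroids.append(tuple(sum(x) / len(members) for x in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid where it is
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    # Objective: sum of squared distances from each point to its cluster's centroid.
    wcss = sum(math.dist(p, centroids[l]) ** 2 for p, l in zip(points, labels))
    return labels, centroids, wcss

points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]
labels, centroids, wcss = kmeans(points, k=2)
print(labels, round(wcss, 3))
```

Which group receives label 0 and which receives label 1 depends on the random initialization; only the grouping itself is meaningful.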

Model-based Clustering

Model-based clustering assumes that the data are generated by a mixture of probability distributions, such as Gaussians, with each component of the mixture corresponding to a cluster. It estimates the parameters of these distributions and assigns each data point to the cluster under which it is most probable. This method is useful when clusters overlap or differ in shape and spread, so that simple distance-based measures do not describe them well.
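As a small illustration, the sketch below fits a mixture of two one-dimensional Gaussians with the expectation-maximization (EM) algorithm, one common way to estimate such models. The function names, the crude initialization, and the variance floor are assumptions made for this example, not a reference implementation.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_two_gaussians(xs, n_iter=50):
    # Crude initialization (assumption): place the two means at the data extremes.
    means = [min(xs), max(xs)]
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: responsibility of each component for each point.
        resp = []
        for x in xs:
            dens = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate weight, mean, and variance from the responsibilities.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(xs)
            means[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, xs)) / nk
            variances[k] = max(var, 1e-6)  # floor (assumption) to avoid a collapsing component
    return weights, means, variances, resp

xs = [1.0, 1.2, 0.8, 1.1, 5.0, 5.3, 4.9, 5.1]
weights, means, variances, resp = fit_two_gaussians(xs)
# Hard assignment: pick the component with the highest responsibility.
labels = [max(range(2), key=lambda k: r[k]) for r in resp]
print([round(m, 3) for m in means], labels)
```

Unlike k-means, the responsibilities in `resp` give a probability of membership for each point, which can be inspected before committing to hard labels.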

Density-based Clustering

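Density-based clustering determines clusters based on the density of data points within a defined neighborhood. It is effective for identifying clusters with irregular shapes and for separating them from noise. Density-based clustering algorithms, such as DBSCAN (Density-Based Spatial Clustering of Applications with Noise), classify data points as core points, border points, or noise points, and build clusters from connected core points and their border points. Because DBSCAN relies on a single density threshold, datasets whose clusters vary widely in density may call for variants such as OPTICS or HDBSCAN.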
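The core, border, and noise distinction can be sketched as follows. This covers only the point-classification step of DBSCAN, not the full cluster expansion; it assumes Euclidean distance and counts a point as part of its own neighborhood. The names `classify_points`, `eps`, and `min_pts` are chosen for the example.

```python
import math

def classify_points(points, eps, min_pts):
    """Label each point 'core', 'border', or 'noise' in the DBSCAN sense."""
    # Neighborhood of a point: all points within eps, including the point itself.
    neighbours = [
        [j for j, q in enumerate(points) if math.dist(p, q) <= eps]
        for p in points
    ]
    # Core points have at least min_pts points in their neighborhood.
    core = {i for i, nb in enumerate(neighbours) if len(nb) >= min_pts}
    kinds = []
    for i, nb in enumerate(neighbours):
        if i in core:
            kinds.append("core")
        elif any(j in core for j in nb):
            kinds.append("border")  # not dense itself, but within reach of a core point
        else:
            kinds.append("noise")
    return kinds

points = [(0, 0), (0, 1), (1, 0), (1, 1), (2.2, 1), (8, 8)]
kinds = classify_points(points, eps=1.5, min_pts=4)
print(kinds)  # ['core', 'core', 'core', 'core', 'border', 'noise']
```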

Fuzzy Clustering

Fuzzy clustering assigns membership scores to each data point, indicating the degree to which it belongs to different clusters. It allows for overlapping clusters, providing a more flexible and nuanced approach. Fuzzy clustering is suitable when there is ambiguity or uncertainty in assigning data points to distinct clusters.
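To show what a membership score looks like, the sketch below computes the memberships used by the fuzzy c-means algorithm for a single point, given fixed cluster centers. It assumes Euclidean distance and the common fuzzifier value m = 2; the function name and sample centers are illustrative. A full algorithm would also alternate this step with re-estimating the centers.

```python
import math

def fuzzy_memberships(point, centers, m=2.0):
    """Degree of membership of `point` in each cluster; the degrees sum to 1."""
    dists = [math.dist(point, c) for c in centers]
    # A point sitting exactly on a center belongs fully to that center.
    if 0.0 in dists:
        hits = [1.0 if d == 0.0 else 0.0 for d in dists]
        return [h / sum(hits) for h in hits]
    power = 2.0 / (m - 1.0)
    # Membership falls as the distance to a center grows relative to the other centers.
    return [1.0 / sum((d_i / d_k) ** power for d_k in dists) for d_i in dists]

centers = [(0.0, 0.0), (4.0, 0.0)]
near_first = fuzzy_memberships((1.0, 0.0), centers)
in_between = fuzzy_memberships((2.0, 0.0), centers)
print([round(u, 3) for u in near_first])  # [0.9, 0.1]
print([round(u, 3) for u in in_between])  # [0.5, 0.5]
```

A point halfway between two centers receives equal membership in both, which is exactly the ambiguity that a hard assignment would hide.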

Each type of cluster analysis has its strengths and is applicable in different scenarios. The choice of clustering method depends on the nature of the data, the desired level of granularity, and the specific objectives of the analysis. It is important to consider the characteristics of the dataset and the goals of the analysis when selecting an appropriate clustering algorithm.

Data Preparation for Cluster Analysis

Before performing cluster analysis, it is crucial to prepare the data appropriately. This involves several important steps, including data cleaning and transformation, handling missing values, scaling and normalization, and feature selection.

Data Cleaning and Transformation

Data cleaning and transformation are necessary to ensure the accuracy and reliability of the dataset. Missing values, outliers, and duplicates should be identified and addressed. Removing outliers helps in reducing the impact of extreme values on the clustering process. Duplicates, on the other hand, can introduce bias and redundancy in the data. By cleaning the data, you can ensure that it is free from these unwanted elements.

Handling Missing Values

Missing values are a common occurrence in datasets and need to be handled appropriately. These missing values can be due to various reasons such as data entry errors or incomplete data collection. Ignoring missing values can lead to biased clustering results. There are different methods to handle missing values, such as complete case analysis or imputation. Complete case analysis involves removing data points with missing values, while imputation involves filling in the missing values with estimated or interpolated values.
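The two approaches can be compared on a toy dataset. The sketch below uses plain Python, represents missing values as `None`, and uses the column mean as the imputed value, which is only one of several possible imputation choices; the records and function names are invented for the example.

```python
from statistics import mean

# Toy records (made up); None marks a missing value.
rows = [
    {"age": 34, "income": 52000},
    {"age": None, "income": 61000},
    {"age": 45, "income": None},
    {"age": 29, "income": 48000},
]

def complete_cases(rows):
    """Complete case analysis: keep only rows with no missing values."""
    return [r for r in rows if all(v is not None for v in r.values())]

def mean_impute(rows):
    """Imputation: replace each missing value with the mean of its column."""
    columns = rows[0].keys()
    fill = {c: mean(r[c] for r in rows if r[c] is not None) for c in columns}
    return [{c: (r[c] if r[c] is not None else fill[c]) for c in columns} for r in rows]

kept = complete_cases(rows)
imputed = mean_impute(rows)
print(len(kept), len(imputed))  # 2 4
```

Complete case analysis halves this dataset, while imputation keeps every row at the cost of inserting estimated values.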

Scaling and Normalization

Scaling and normalization are important for cluster analysis to ensure that variables with different scales do not dominate the clustering process. Scaling or standardizing the variables helps in giving them equal importance. This is achieved by transforming the variables to have zero mean and unit variance. Normalization, on the other hand, scales the values of the variables to a specified range, often between 0 and 1. Scaling and normalization enable variables to have similar ranges and prevent biases in the clustering results.
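Both transformations are short enough to write out directly. The sketch below is a minimal version in plain Python that uses the population standard deviation for standardization; it does not guard against constant columns, where the denominator would be zero. The function names and sample values are illustrative.

```python
from statistics import mean, pstdev

def standardize(values):
    """Z-score scaling: the result has zero mean and unit variance."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]

def min_max(values, lo=0.0, hi=1.0):
    """Min-max normalization: rescale values linearly into [lo, hi]."""
    vmin, vmax = min(values), max(values)
    return [lo + (v - vmin) * (hi - lo) / (vmax - vmin) for v in values]

incomes = [20000, 40000, 60000, 80000]
z = standardize(incomes)
scaled = min_max(incomes)
print([round(v, 3) for v in z])
print([round(v, 3) for v in scaled])
```

After either transformation, a variable measured in tens of thousands no longer outweighs one measured in single digits when distances are computed.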

Feature Selection

In some cases, the original dataset may contain a large number of features or variables, which can introduce noise and unnecessary complexity in the clustering process. Feature selection techniques help in identifying the most relevant and informative features for clustering. By reducing the number of features, noise can be minimized, and clustering results can be improved. Simple selection methods include removing variables with very low variance or variables that are highly correlated with others. Dimensionality reduction techniques such as principal component analysis (PCA) take a related approach: rather than selecting among the original variables, they combine them into a smaller set of components. Methods that rely on a target variable, such as recursive feature elimination, apply only when labels are available.

Overall, data preparation is an essential step in cluster analysis. By performing data cleaning and transformation, handling missing values, scaling and normalization, and feature selection, you can ensure that the dataset is suitable for clustering. This enhances the quality and accuracy of the clustering results, enabling meaningful insights and interpretations.

Choosing the Right Number of Clusters

Determining the right number of clusters is crucial in cluster analysis. It allows us to gain meaningful insights and make informed decisions based on the patterns and structures within the data. To make this determination, several methods can be employed, including the elbow method, silhouette analysis, and cluster evaluation.

The elbow method: This is a graphical technique that helps us identify a suitable number of clusters by plotting the within-cluster sum of squared distances against the number of clusters. Adding clusters generally reduces this sum, so the elbow method looks for the point where the curve bends and further clusters no longer reduce it significantly. This point represents a reasonable balance between cluster complexity and explanatory power.

In an elbow plot, the x-axis represents the number of clusters, and the y-axis represents the sum of squared distances. The elbow method suggests that the optimal number of clusters is at the “elbow” of the graph, which is the point where the incremental gain of adding more clusters diminishes.
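The numbers behind such a curve can be computed as in the sketch below, which runs a small k-means for several values of K on made-up data with three well-separated groups. To keep the result reproducible it assumes a deterministic farthest-first initialization; the function names and the data are illustrative only.

```python
import math

def farthest_first(points, k):
    # Deterministic initialization (assumption): start at the first point, then
    # repeatedly add the point farthest from all centers chosen so far.
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))
    return centers

def kmeans_wcss(points, k, n_iter=100):
    """Run k-means and return the within-cluster sum of squared distances."""
    centers = farthest_first(points, k)
    for _ in range(n_iter):
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        updated = []
        for c in range(k):
            members = [p for p, l in zip(points, labels) if l == c]
            updated.append(
                tuple(sum(x) / len(members) for x in zip(*members)) if members else centers[c]
            )
        if updated == centers:
            break
        centers = updated
    return sum(math.dist(p, centers[l]) ** 2 for p, l in zip(points, labels))

# Three tight groups of three points each (made-up data).
points = [(0, 0), (0, 1), (1, 0), (10, 0), (10, 1), (11, 0), (5, 8), (5, 9), (6, 8)]
wcss = {k: kmeans_wcss(points, k) for k in range(1, 6)}
for k, value in wcss.items():
    print(k, round(value, 2))
```

On this data the sum drops sharply up to K = 3 and only marginally afterwards, which is the bend the elbow method looks for.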

Silhouette analysis: This method measures how well individual data points fit within their assigned clusters. It calculates a silhouette coefficient for each data point, which ranges from -1 to 1. A coefficient close to 1 indicates that the data point is well-matched to its cluster, a coefficient near 0 indicates that it lies on the boundary between two clusters, and a coefficient close to -1 suggests that the data point may be better suited to a different cluster. By evaluating the average silhouette coefficient across all data points, we can determine the overall quality of the clustering solution, and by comparing this average across candidate numbers of clusters, we can favor the number that yields the highest value.
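For each point, the coefficient is (b - a) / max(a, b), where a is the mean distance to the other points in its own cluster and b is the smallest mean distance to the points of any other cluster. The sketch below computes it in plain Python with Euclidean distance; scoring points in singleton clusters as 0 is a convention assumed here, and the function name and data are illustrative.

```python
import math

def silhouette_scores(points, labels):
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, grouped by that point's cluster.
        by_cluster = {}
        for j, q in enumerate(points):
            if i != j:
                by_cluster.setdefault(labels[j], []).append(math.dist(p, q))
        own = by_cluster.pop(labels[i], None)
        if not own or not by_cluster:
            scores.append(0.0)  # assumption: singleton cluster or single-cluster case scores 0
            continue
        a = sum(own) / len(own)                                # cohesion
        b = min(sum(d) / len(d) for d in by_cluster.values())  # separation
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return scores

points = [(0.0,), (1.0,), (10.0,), (11.0,)]
good = silhouette_scores(points, [0, 0, 1, 1])  # sensible grouping
bad = silhouette_scores(points, [0, 1, 0, 1])   # deliberately poor grouping
print(round(sum(good) / len(good), 3), round(sum(bad) / len(bad), 3))
```

The sensible grouping averages close to 1, while the deliberately poor one averages below 0.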

Cluster evaluation is another approach used to assess the quality of clustering results. It involves comparing the obtained clusters against some form of ground truth or expert knowledge. Several evaluation metrics can be employed, such as the Rand Index, Adjusted Rand Index, or Fowlkes-Mallows Index. By quantifying the agreement between the obtained clusters and the expected clusters, we can gauge the accuracy and validity of the clustering solution.
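As an example of such a metric, the Rand Index is the fraction of point pairs on which two labelings agree, meaning that both place the pair in the same cluster or both place it in different clusters. The sketch below computes the unadjusted index in plain Python; the function name and label vectors are made up for the example.

```python
from itertools import combinations

def rand_index(labels_true, labels_pred):
    """Fraction of point pairs on which the two labelings agree."""
    pairs = list(combinations(range(len(labels_true)), 2))
    agree = 0
    for i, j in pairs:
        same_true = labels_true[i] == labels_true[j]
        same_pred = labels_pred[i] == labels_pred[j]
        agree += same_true == same_pred  # both "together" or both "apart"
    return agree / len(pairs)

truth = [0, 0, 0, 1, 1, 1]
perfect = rand_index(truth, [1, 1, 1, 0, 0, 0])  # same grouping, different label names
partial = rand_index(truth, [0, 0, 1, 1, 1, 1])  # one point placed in the wrong group
print(perfect, round(partial, 3))  # 1.0 0.667
```

Because only pair relationships are compared, the index is unaffected by how the clusters happen to be numbered.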

When applying cluster analysis, it’s essential to choose the right number of clusters to ensure meaningful insights and actionable outcomes. The elbow method, silhouette analysis, and cluster evaluation provide valuable tools to make this determination. By utilizing these methods, analysts and researchers can uncover hidden patterns and structures in their data, leading to more informed decision-making and improved understanding of complex phenomena.

Interpreting and Visualizing Cluster Analysis Results

Cluster analysis results can be interpreted and visualized using various principles and techniques. These include:

Cluster Centrality

Cluster centrality defines clusters based on central points or centroids. It identifies the most representative data points within a cluster, providing insight into the characteristics and attributes that define the cluster. This concept helps in understanding the cluster’s central tendency and its relationship to other clusters.

Objective Function

The objective function quantifies the quality of a cluster analysis. It measures how well the algorithm has achieved its clustering goals, such as minimizing intra-cluster distances and maximizing inter-cluster distances. By evaluating the objective function, analysts can assess the effectiveness and accuracy of the cluster analysis results.

Cluster Separation

Cluster separation aims to create distinct and well-separated clusters. It focuses on maximizing the dissimilarity between different clusters to ensure that each cluster represents a unique pattern or group. This principle helps in identifying meaningful boundaries and differences between clusters, enhancing the interpretability of the results.

Cluster Hierarchy

Cluster hierarchy provides insights into the relationships between clusters. It represents the hierarchical structure of the clusters, showing how they are nested or grouped at different levels. This visual representation allows analysts to understand the similarities and dissimilarities between clusters, facilitating a deeper understanding of the underlying data patterns.

Unsupervised Learning

Cluster analysis is an unsupervised learning technique, meaning it does not rely on predefined labels or class information. Instead, it identifies inherent patterns and structures within the data based solely on the similarities between data points. This characteristic makes cluster analysis a valuable tool for exploratory data analysis and discovering hidden insights.

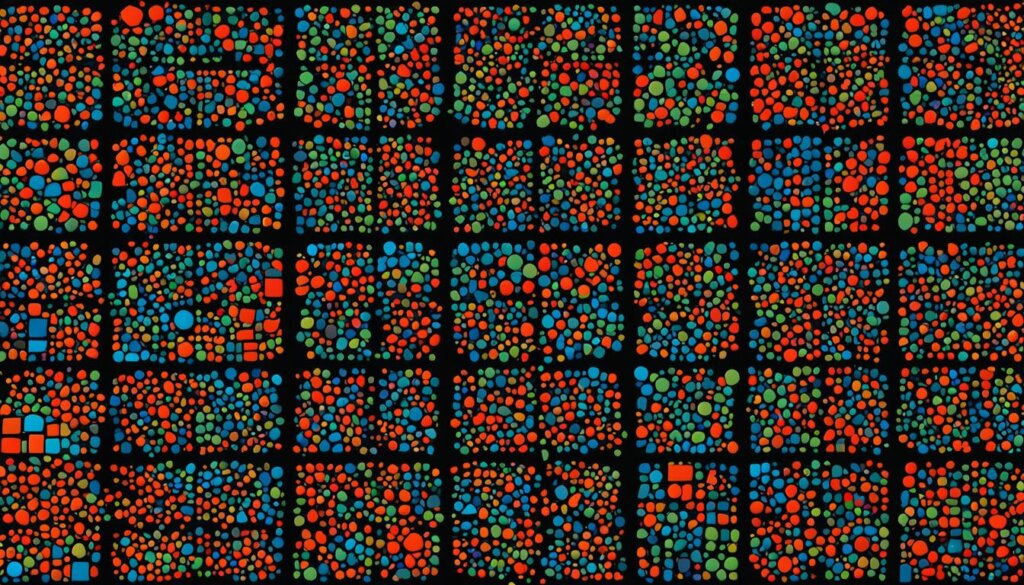

To gain a better understanding of these principles and techniques, let’s take a look at a visual representation of cluster analysis results:

In the image above, each color represents a different cluster, while the dots represent data points. The visual representation showcases how cluster analysis helps identify distinct groups within the dataset, enabling a deeper understanding of the underlying patterns and relationships.

Common Mistakes and Disadvantages with Cluster Analysis

While cluster analysis is a valuable technique, it is important to be aware of its limitations and potential pitfalls. By understanding these common mistakes and disadvantages, analysts can improve their approach to cluster analysis and achieve more accurate and meaningful results.

Inappropriate Data Preparation

One common mistake in cluster analysis is inappropriate data preparation. This includes not properly cleaning and transforming the data before applying clustering algorithms. Failure to handle missing values, outliers, and duplicates can lead to biased and inaccurate results. It is important to carefully preprocess the data and ensure its quality before proceeding with cluster analysis.

Improper Selection of Distance Metrics or Clustering Algorithms

Another common mistake is the improper selection of distance metrics or clustering algorithms. Different clustering algorithms and distance metrics have different assumptions and characteristics, and choosing the wrong ones can lead to suboptimal clustering results. It is crucial to understand the strengths and limitations of various algorithms and metrics and select the most appropriate ones for the specific dataset and research objective.

Overinterpretation of Results

Overinterpretation of cluster analysis results is also a common mistake. Clusters are statistical constructs and should not be overgeneralized or given arbitrary labels without proper validation. It is important to critically evaluate and interpret the clusters in the context of the data and research question, considering other factors and variables that may influence the clustering patterns.

Disadvantages of Cluster Analysis

In addition to common mistakes, cluster analysis has inherent disadvantages that need to be considered. Some of these include:

- Sensitivity to Initial Choices: Cluster analysis can be sensitive to initial choices, such as the number of clusters or seed values. Small changes in these choices can result in different clustering outcomes, making it important to carefully consider and validate the results.

- Need for Determining the Number of Clusters: Determining the optimal number of clusters can be challenging and subjective. While there are techniques such as the elbow method and silhouette analysis to help in this process, it requires careful consideration and domain expertise.

- Difficulty in Evaluating Clustering Performance without Ground Truth: Unlike supervised learning algorithms, cluster analysis does not have predefined labels or class information to evaluate its performance. This makes it difficult to objectively assess the quality of clustering results, especially in the absence of ground truth.
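The sensitivity to initial choices is easy to reproduce. In the sketch below, the same k-means loop is run twice on four made-up points at the corners of a rectangle, starting from two different pairs of initial centers. Both runs converge, but to different partitions with different within-cluster sums of squares. The function name `lloyd` and the data are illustrative.

```python
import math

def lloyd(points, centers, n_iter=100):
    """k-means iterations starting from the given initial centers."""
    centers = list(centers)
    for _ in range(n_iter):
        labels = [
            min(range(len(centers)), key=lambda c: math.dist(p, centers[c])) for p in points
        ]
        updated = []
        for c in range(len(centers)):
            members = [p for p, l in zip(points, labels) if l == c]
            updated.append(
                tuple(sum(x) / len(members) for x in zip(*members)) if members else centers[c]
            )
        if updated == centers:
            break
        centers = updated
    wcss = sum(math.dist(p, centers[l]) ** 2 for p, l in zip(points, labels))
    return labels, wcss

# Corners of a 3-by-2 rectangle.
points = [(0, 0), (0, 2), (3, 0), (3, 2)]
labels_a, wcss_a = lloyd(points, [(0, 0), (3, 0)])  # start on the two bottom corners
labels_b, wcss_b = lloyd(points, [(0, 0), (0, 2)])  # start on the two left corners
print(labels_a, wcss_a)  # [0, 0, 1, 1] 4.0
print(labels_b, wcss_b)  # [0, 1, 0, 1] 9.0
```

A common mitigation is to run the algorithm from several starting points and keep the solution with the lowest objective value.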

Despite these limitations and potential pitfalls, cluster analysis remains a valuable tool for exploring patterns and relationships in datasets. By understanding these common mistakes and disadvantages, analysts can make informed decisions and maximize the effectiveness of cluster analysis in their research and problem-solving endeavors.

Best Practices for Cluster Analysis

When conducting cluster analysis, it is essential to follow best practices to ensure accurate and meaningful results. By adhering to these practices, you can enhance the effectiveness of your analysis and derive valuable insights from your data.

Data Preparation: Properly preparing your data is crucial for reliable cluster analysis. This involves cleaning and transforming your data, handling missing values, scaling and normalizing variables, and selecting relevant features. By addressing these aspects, you can minimize the impact of noise and improve the quality of your clusters.

Algorithm Selection: Choosing the appropriate clustering algorithm is another key consideration. Different algorithms have different assumptions and characteristics, so it’s important to select the one that best suits your data and objectives. Popular algorithms include hierarchical clustering, k-means clustering, and model-based clustering.

Validation and Context: Validating the results of your cluster analysis is essential to ensure their accuracy and reliability. This can be achieved through various validation techniques, such as evaluating cluster cohesion and separation. Additionally, considering the context of your analysis is important, as it allows you to interpret your clusters in a meaningful way and align them with your specific goals and objectives.

To support your cluster analysis efforts, there are numerous tools and resources available. Environments like R, Python, and MATLAB offer powerful libraries for performing cluster analysis. Online tutorials, forums, and academic research papers also provide valuable insights and guidance. By leveraging these tools and staying updated with the latest advancements in cluster analysis, you can enhance your skills and explore new avenues for application.

FAQ

What is cluster analysis?

Cluster analysis is a statistical technique that groups a set of objects or data points into clusters based on their similarity. It helps reveal patterns and structures within a dataset, providing insights into underlying relationships and associations.

What are the types of cluster analysis?

There are several types of cluster analysis, including hierarchical clustering, k-means clustering, model-based clustering, density-based clustering, and fuzzy clustering.

How should data be prepared for cluster analysis?

Data preparation for cluster analysis involves cleaning and transforming the data, which includes addressing missing values, outliers, and duplicates. Missing values can be handled through methods such as complete case analysis or imputation. Scaling and normalization are important to ensure variables have similar ranges. Feature selection may be necessary to reduce noise and improve clustering results.

How can the right number of clusters be determined?

The right number of clusters can be determined through methods like the elbow method and silhouette analysis. The elbow method identifies the point where increasing the number of clusters no longer significantly reduces the sum of squared distances. Silhouette analysis measures how well data points lie within their assigned clusters.

How can cluster analysis results be interpreted and visualized?

Cluster analysis results can be interpreted and visualized through principles and techniques such as cluster centrality, objective functions, cluster separation, cluster hierarchy, and the unsupervised nature of cluster analysis.

What are the common mistakes and disadvantages of cluster analysis?

Common mistakes in cluster analysis include inappropriate data preparation, improper selection of distance metrics or clustering algorithms, and overinterpretation of results. Some disadvantages of cluster analysis include sensitivity to initial choices, the need for determining the number of clusters, and the difficulty in evaluating clustering performance without ground truth.

What are the best practices for cluster analysis?

Best practices for cluster analysis include proper data preparation, selecting appropriate clustering algorithms, validating clustering results, considering the context of the analysis, and keeping up with the latest research and advancements in the field. Various tools and resources are available for cluster analysis, such as software packages, libraries, and online tutorials.