
From the colossal, room-sized machines of yesteryear to the pocket-sized supercomputers we carry today, the journey of the computer processor is a fascinating saga of relentless innovation. The evolution of computer processors is not just a story of shrinking components and increasing speeds; it’s a testament to human ingenuity, pushing the boundaries of what’s possible and fundamentally reshaping our world. Understanding this incredible progression is key to appreciating the technological marvels that power everything from our smartphones to advanced AI systems in 2025. This article delves deep into the historical milestones, architectural shifts, and visionary minds that have sculpted the very “brain” of modern computing.

Key Takeaways

- The journey of computer processors began with mechanical calculators and vacuum tube behemoths, laying the groundwork for digital computation.

- The invention of the transistor and integrated circuit revolutionized processor design, enabling miniaturization and significant performance gains.

- Major architectural shifts, such as the move from CISC to RISC and the advent of multi-core processors, have dramatically impacted processing power and efficiency.

- The industry continues to innovate with specialized processors (GPUs, NPUs) and new materials, addressing future challenges like AI and quantum computing.

- Moore’s Law, while facing physical limits, has been a guiding principle, driving consistent exponential growth in processor capabilities for decades.

The Genesis: Early Computing Devices and the Dawn of Digital

Before the silicon chip, computing was a mechanical affair. The seeds of modern processors were sown in the minds of pioneers like Charles Babbage and Ada Lovelace, who envisioned analytical engines capable of complex calculations.

From Mechanical Calculators to Electro-Mechanical Giants

The 17th century saw the invention of rudimentary mechanical calculators by Wilhelm Schickard and Blaise Pascal. Fast forward to the 19th century, and Charles Babbage conceptualized the Difference Engine and later the Analytical Engine, considered the precursor to the modern computer. While never fully built in his lifetime, Babbage’s designs introduced concepts like sequential control, branching, and looping – fundamental elements of any processor.

The 1930s and 1940s brought electro-mechanical computers built from relays and switches. Machines like the Zuse Z3 (1941) and the Harvard Mark I (1944) were marvels of engineering, but they were slow, prone to mechanical failure, and consumed vast amounts of space and power. The Z3 already used binary arithmetic, while the Mark I worked in decimal; together they showed that automatic, program-controlled calculation was practical and laid the foundation for subsequent digital computation.

The Vacuum Tube Era: ENIAC and UNIVAC

The true birth of electronic computation came with the vacuum tube. These glass envelopes, housing electrodes, could rapidly switch electronic signals, offering speeds far beyond mechanical relays.

- ENIAC (Electronic Numerical Integrator and Computer): Completed in 1946, ENIAC was the first general-purpose electronic digital computer. It weighed 30 tons, occupied 1,800 square feet, and contained over 17,000 vacuum tubes. It could perform 5,000 additions per second, an astonishing feat for its time. However, a single tube failure could bring the entire machine down, and its power consumption was immense.

- UNIVAC I (Universal Automatic Computer): Introduced in 1951, UNIVAC I was the first commercial computer produced in the United States. It famously predicted the outcome of the 1952 presidential election, bringing computers into public consciousness.

These early machines were programmable, albeit through laborious manual rewiring or punch cards, and demonstrated the immense potential of electronic processing. They marked the initial, groundbreaking steps in the evolution of computer processors.

The Transistor Revolution: Miniaturization and Power

The limitations of vacuum tubes – their size, heat, power consumption, and fragility – were evident. A breakthrough was desperately needed, and it arrived in the form of the transistor.

The Invention of the Transistor (1947)

In 1947, John Bardeen and Walter Brattain, working in William Shockley’s group at Bell Labs, demonstrated the point-contact transistor; Shockley followed with the junction transistor design shortly afterward. This tiny semiconductor device could amplify or switch electronic signals, performing the same function as a vacuum tube but with distinct advantages:

- Size: Significantly smaller.

- Power: Consumed far less power.

- Heat: Generated much less heat.

- Reliability: Far more durable and reliable.

The transistor quickly replaced vacuum tubes in radios, televisions, and, crucially, computers. Its invention earned the trio the Nobel Prize in Physics in 1956 and ushered in the second generation of computers.

The Integrated Circuit (IC): Paving the Way for Microprocessors

While transistors were a massive improvement, early computers still involved wiring hundreds or thousands of individual transistors together. This was complex, costly, and prone to errors. The next major leap was the integrated circuit (IC), independently invented by Jack Kilby at Texas Instruments (1958) and Robert Noyce at Fairchild Semiconductor (1959).

An IC combined multiple transistors, resistors, and capacitors onto a single piece of semiconductor material, typically silicon. This meant:

- Further Miniaturization: Entire circuits could be etched onto a tiny chip.

- Increased Reliability: Fewer discrete components meant fewer soldering points and less chance of failure.

- Reduced Manufacturing Cost: Mass production became more feasible.

The IC was the fundamental building block that made microprocessors possible. Without it, the modern era of computing would simply not exist. This period truly accelerated the evolution of computer processors.

The Birth of the Microprocessor: Intel and Beyond

The 1970s marked the watershed moment: the creation of the microprocessor, a complete central processing unit (CPU) on a single integrated circuit.

Intel 4004 (1971): The First Commercial Microprocessor

In 1971, Intel released the 4004, developed by a team that included Federico Faggin, Marcian Hoff, and Stanley Mazor. Originally commissioned by the Japanese calculator company Busicom, it was a 4-bit CPU containing 2,300 transistors that could execute roughly 92,000 instructions per second (IPS) at a clock speed of 740 kHz.

The 4004 was monumental because it demonstrated that a complex computational brain could reside on a single chip. It kickstarted the microprocessor industry and laid the foundation for all subsequent personal computing. It was a true revolution in the evolution of computer processors.

The 8-bit Era: Intel 8080 and Motorola 6800

Following the 4004, the microprocessor quickly evolved.

- Intel 8080 (1974): An 8-bit processor, the 8080 was significantly more powerful and became the brain of many early personal computers, including the Altair 8800, which is often credited with sparking the personal computer revolution. It had 6,000 transistors and could address 64KB of memory.

- Motorola 6800 (1974): Motorola’s entry into the 8-bit market offered strong competition. These processors enabled more complex software and broader applications, moving computers out of labs and into businesses and homes.

The 16-bit and 32-bit Processors: IBM PC and Apple Macintosh

The late 1970s and early 1980s saw the shift to 16-bit and then 32-bit architectures, allowing processors to handle larger chunks of data and memory, leading to more sophisticated operating systems and applications.

- Intel 8086/8088 (1978/1979): The 8088, a cost-reduced version of the 8086 with an 8-bit external data bus, was chosen by IBM for its revolutionary IBM Personal Computer in 1981. This decision cemented Intel’s x86 architecture as a dominant force in the PC market for decades.

- Motorola 68000 (1979): A 16/32-bit hybrid, the 68000 powered iconic machines like the original Apple Macintosh, Amiga, and Atari ST. Its elegant design and powerful instruction set were favored by engineers and led to some of the most innovative systems of the era.

This period saw processors transition from niche components to the core of widely accessible personal computing, dramatically changing how people interacted with technology.
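The link between wider addresses and "more memory" is plain arithmetic: each extra address bit doubles the number of bytes a processor can reach. The sketch below is illustrative only; the address widths are the commonly documented figures for these chips, and the helper names are made up for this example.

```python
def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses reachable with the given address width."""
    return 2 ** address_bits


def human_readable(num_bytes: int) -> str:
    """Format a power-of-two byte count using binary units (1 KB = 1024 bytes)."""
    units = ["B", "KB", "MB", "GB", "TB"]
    value = num_bytes
    for unit in units:
        if value < 1024 or unit == units[-1]:
            return f"{value} {unit}"
        value //= 1024


# Address widths of processors discussed in this article.
ADDRESS_BITS = {
    "Intel 8080": 16,
    "Intel 8086/8088": 20,
    "Motorola 68000": 24,
    "32-bit (e.g., Intel 386)": 32,
}

for name, bits in ADDRESS_BITS.items():
    print(f"{name}: {bits}-bit addresses -> {human_readable(addressable_bytes(bits))}")
```

The jump from 16 to 32 address bits multiplies the reachable memory by 65,536, which is a large part of why these generations could host far more sophisticated operating systems.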

Architectural Innovations: CISC, RISC, and Beyond

As processors became more powerful, engineers began to experiment with different design philosophies to maximize performance and efficiency.

CISC vs. RISC: Two Philosophies of Instruction Sets

The debate between Complex Instruction Set Computing (CISC) and Reduced Instruction Set Computing (RISC) architectures was central to processor design for decades.

- CISC (Complex Instruction Set Computing): Processors like Intel’s x86 family are CISC. They feature a large, complex set of instructions, where a single instruction can perform multiple operations (e.g., loading from memory, performing an arithmetic operation, and storing the result). This reduces the number of instructions needed for a task but makes the hardware that decodes and executes them more complex to design.

- RISC (Reduced Instruction Set Computing): Processors like those designed by ARM or MIPS are RISC. They have a smaller, simpler set of instructions, each performing only one basic operation. This makes the hardware simpler, faster to design, and allows for more efficient pipelining and execution. However, a single complex task might require more individual instructions.

Originally, CISC dominated the PC market due to its backward compatibility and early lead. However, RISC designs, particularly ARM, gained significant traction in embedded systems and mobile devices due to their power efficiency and smaller die size. Today, many modern processors use a hybrid approach, where CISC instructions are internally translated into simpler micro-operations for execution on a RISC-like core. The evolution of computer processors has been significantly shaped by this architectural interplay.
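To make the idea of translating a complex instruction into simpler micro-operations concrete, here is a toy sketch. The mnemonics, the `tmp` register, and the `decode_to_micro_ops` function are hypothetical and chosen for readability; they are not real x86 encodings or any vendor's actual micro-op format.

```python
def decode_to_micro_ops(instruction: str) -> list[str]:
    """Split a toy memory-operand ADD into load / add / store micro-operations.

    Accepts the hypothetical form "ADD [address], register"; anything else
    is returned unchanged as a single micro-operation.
    """
    opcode, _, operands = instruction.partition(" ")
    parts = [p.strip() for p in operands.split(",")]
    if opcode == "ADD" and len(parts) == 2 and parts[0].startswith("["):
        memory, register = parts
        return [
            f"LOAD tmp, {memory}",        # read the memory operand into a temporary
            f"ADD tmp, tmp, {register}",  # arithmetic on registers only
            f"STORE {memory}, tmp",       # write the result back to memory
        ]
    return [instruction]


for micro_op in decode_to_micro_ops("ADD [0x1000], r1"):
    print(micro_op)
```

One CISC-style instruction becomes three RISC-style steps, each of which touches either memory or the arithmetic unit, but not both. That separation is what makes the simpler operations easier to pipeline.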

The Rise of Pipelining and Superscalar Architectures

To further boost performance without increasing clock speed indefinitely, engineers developed techniques like pipelining and superscalar execution.

- Pipelining: Breaks down the execution of an instruction into several stages (fetch, decode, execute, write-back), allowing multiple instructions to be in different stages of execution simultaneously, much like an assembly line. This increases throughput.

- Superscalar Architectures: Implement multiple execution units within a single processor core, allowing the CPU to execute more than one instruction per clock cycle. This parallel processing at the instruction level significantly enhances performance.

These innovations allowed processors to achieve much higher effective instruction rates, even as fundamental clock speeds began to hit physical limits.
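The throughput gain from the assembly-line approach can be estimated with a simple cycle count. The sketch below assumes an idealized pipeline with no stalls, hazards, or branch mispredictions, so real processors will fall short of these numbers; the function names are illustrative.

```python
import math


def unpipelined_cycles(instructions: int, stages: int) -> int:
    """Each instruction runs through every stage before the next one starts."""
    return instructions * stages


def pipelined_cycles(instructions: int, stages: int, issue_width: int = 1) -> int:
    """Ideal cycle count: fill the pipeline once, then complete
    `issue_width` instructions per cycle (issue_width > 1 models superscalar)."""
    if instructions == 0:
        return 0
    return stages + math.ceil(instructions / issue_width) - 1


n, k = 100, 4  # 100 instructions, 4 stages (fetch, decode, execute, write-back)
print("no pipeline:     ", unpipelined_cycles(n, k))
print("pipelined:       ", pipelined_cycles(n, k))
print("2-wide superscalar:", pipelined_cycles(n, k, issue_width=2))
```

Under these assumptions the pipelined version approaches one instruction per cycle, and a two-wide superscalar design approaches two, without any change in clock speed.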

Moore’s Law and Its Impact

No discussion of processor evolution is complete without acknowledging Moore’s Law.

The Observation and Its Prophecy

In 1965, Gordon Moore, then at Fairchild Semiconductor and later a co-founder of Intel, observed that the number of transistors on an integrated circuit was doubling roughly every year; in 1975 he revised the pace to a doubling about every two years. This observation, later dubbed Moore’s Law, became a self-fulfilling prophecy and a driving force in the semiconductor industry.
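A two-year doubling period compounds quickly. As a rough illustration, the sketch below projects forward from the Intel 4004's 2,300 transistors in 1971. It draws an idealized curve, not measured transistor counts for any real chip, and the function name is made up for this example.

```python
def projected_transistors(start_count: int, start_year: int, year: int,
                          doubling_years: float = 2.0) -> float:
    """Transistor count implied by a fixed doubling period."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Starting point taken from the Intel 4004 (2,300 transistors, 1971).
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(2300, 1971, year)
    print(f"{year}: about {count:,.0f} transistors")
```

Fifty years at this pace means 25 doublings, a factor of more than 33 million, which takes the projection from thousands of transistors into the tens of billions.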

Driving Miniaturization and Performance

For decades, Moore’s Law accurately predicted the exponential growth in transistor density, leading to:

- Increased Performance: More transistors meant more complex circuits, more cache, and more execution units.

- Reduced Cost: As more transistors could be packed onto a single chip, the cost per transistor decreased dramatically.

- Lower Power Consumption: Smaller transistors generally consume less power.

This relentless pace of innovation has given us the incredibly powerful and affordable computing devices we enjoy in 2025. It also fueled the innovation cycle for components like GPUs and memory.

The Limits of Moore’s Law and Future Challenges

While incredibly influential, Moore’s Law is now facing fundamental physical limits. Transistors are approaching atomic scales, where quantum effects become significant, and the laws of physics impose constraints on how small and how fast they can get without generating excessive heat.

Future challenges include:

- Heat Dissipation: Packing more transistors into smaller spaces generates immense heat, which can limit performance and reliability.

- Power Efficiency: While individual transistors are more efficient, the sheer number of them leads to high overall power consumption in high-performance processors.

- Manufacturing Costs: The cost of building state-of-the-art fabrication plants (fabs) is skyrocketing, making it harder for new companies to enter the market.

These challenges are pushing the industry towards new avenues of innovation beyond simple transistor scaling.

The Multi-Core Era: Parallel Processing for the Masses

As single-core processor speeds plateaued due to power and heat limits, the industry shifted its focus to parallel processing.

The Advent of Dual-Core and Quad-Core Processors

Around the mid-2000s, chip manufacturers like Intel and AMD realized that simply increasing clock speeds was no longer sustainable. Instead, they began integrating multiple independent processing units (cores) onto a single chip.

- Dual-Core Processors (e.g., Intel Pentium D, AMD Athlon 64 X2, Intel Core Duo): The first mainstream x86 dual-core chips arrived in 2005, with Intel’s Core Duo following in early 2006. They contained two complete CPU cores, allowing them to execute two instruction threads simultaneously.

- Quad-Core Processors (e.g., Intel Core 2 Quad, AMD Phenom): Soon followed, offering four cores and even greater parallel processing capabilities.

This was a significant paradigm shift. Software developers had to adapt their applications to be “multi-threaded” to take full advantage of these new architectures. Operating systems also evolved to efficiently distribute tasks across multiple cores. This marked a new phase in the evolution of computer processors, focusing on horizontal scaling.
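Why software had to change is captured by Amdahl's law: the part of a program that cannot run in parallel limits the speedup, no matter how many cores are added. The sketch below applies the standard formula; the 90% parallel fraction is an assumed value for illustration.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only `parallel_fraction` of the work scales with cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumed workload: 90% of the run time can be spread across cores.
for cores in (1, 2, 4, 8, 64):
    print(f"{cores:>2} cores: at most {amdahl_speedup(0.9, cores):.2f}x faster")
```

With 10% of the work stuck on one core, even an unlimited number of cores cannot deliver more than a 10x speedup, which is why restructuring applications for multi-threading mattered as much as the hardware itself.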

Many-Core Processors and Heterogeneous Computing

Today, multi-core processors are ubiquitous, ranging from a few cores in smartphones to dozens or even hundreds in high-performance computing (HPC) servers.

- Many-Core Processors: Processors with a large number of cores (e.g., Intel Xeon Phi, specialized server CPUs) are designed for highly parallel workloads, common in scientific simulations, data analytics, and artificial intelligence.

- Heterogeneous Computing: This involves combining different types of processing units on a single chip or system, each optimized for specific tasks. A common example is the integration of CPU and GPU cores (APUs from AMD, Intel’s integrated graphics).

This approach allows for optimal performance and efficiency by allocating tasks to the most suitable processing element. For instance, optimizing a gaming PC often involves understanding how CPU and GPU work together.

Specialized Processors: Beyond the General-Purpose CPU

While the CPU remains the brain, many modern computational tasks benefit from specialized hardware designed for particular types of workloads.

Graphics Processing Units (GPUs)

Originally designed to accelerate graphics rendering for video games, GPUs have evolved into powerful parallel processing workhorses.

- Massively Parallel Architecture: Unlike CPUs with a few powerful cores, GPUs have hundreds or thousands of smaller, simpler cores optimized for parallel mathematical operations, especially matrix multiplications.

- Applications Beyond Graphics: This makes them incredibly effective for tasks like:

- Scientific simulations

- Cryptocurrency mining

- Machine learning and AI (training neural networks)

- Video editing and rendering

The rise of GPUs for general-purpose computing (GPGPU) has been one of the most significant developments in recent decades, particularly for machine learning and AI.

Neural Processing Units (NPUs) and AI Accelerators

With the explosion of artificial intelligence and machine learning, a new category of specialized processors has emerged: Neural Processing Units (NPUs) or AI accelerators.

- Optimized for AI Workloads: NPUs are specifically designed to accelerate operations common in neural networks, such as inference (making predictions) and sometimes training. They often feature highly parallel architectures with specialized arithmetic units for tasks like low-precision matrix multiplication.

- Applications: Found in smartphones (for on-device AI tasks like facial recognition and voice processing), autonomous vehicles, and data centers (for large-scale AI model deployment).

- Cloud AI Accelerators: Companies like Google (TPU), Amazon (Inferentia), and Microsoft are developing custom AI chips for their cloud services, offering immense computational power for AI workloads.
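Low-precision arithmetic is easier to picture with a small example. The sketch below uses symmetric int8 quantization, one common scheme and not a description of any particular NPU, to compute a dot product (the building block of matrix multiplication) with integer multiply-accumulate. The values and function names are invented for illustration.

```python
def quantize(values: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def quantized_dot(a: list[float], b: list[float]) -> float:
    """Dot product computed on int8-range integers, rescaled to a float at the end."""
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    integer_sum = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return integer_sum * scale_a * scale_b


weights = [0.12, -0.50, 0.33, 0.90]   # illustrative values
inputs = [1.00, 0.25, -0.75, 0.40]
exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"exact={exact:.4f}  int8 approximation={approx:.4f}")
```

The integer result is slightly off from the floating-point one, but 8-bit multipliers are much smaller and cheaper in silicon than 32-bit floating-point units, so far more of them fit on a chip. For inference workloads that trade is often acceptable.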

These specialized chips represent a critical direction for the future evolution of computer processors, enabling increasingly intelligent applications.

Digital Signal Processors (DSPs) and FPGAs

Other specialized processors play crucial roles in specific domains:

- Digital Signal Processors (DSPs): Optimized for processing digital signals in real-time. Found in audio equipment, telecommunications, and medical imaging.

- Field-Programmable Gate Arrays (FPGAs): Reconfigurable integrated circuits that allow users to program their hardware logic. They offer flexibility and high performance for specialized tasks where custom hardware acceleration is needed.

These specialized units highlight the growing trend of tailoring silicon to specific computational demands rather than relying solely on general-purpose CPUs.

The Modern Landscape: 2025 and Beyond

In 2025, the processor landscape is incredibly diverse and dynamic, with ongoing innovation across multiple fronts.

Continued Miniaturization and Advanced Manufacturing (e.g., 3nm, 2nm)

Even as Moore’s Law slows, manufacturers continue to push the boundaries of semiconductor fabrication.

- Advanced Process Nodes: Companies like TSMC, Intel, and Samsung are investing heavily in developing smaller process nodes (e.g., 3 nanometer, 2 nanometer, and even smaller). These advancements allow for denser transistors, improved power efficiency, and slightly higher performance.

- New Materials: Research into novel materials beyond silicon, such as gallium nitride (GaN) and silicon carbide (SiC), aims to further improve transistor performance and power handling, especially in power electronics.

Chiplet Design and Heterogeneous Integration

To overcome the challenges of monolithic chip design (where an entire complex processor is built on a single piece of silicon), chiplet architectures are becoming increasingly popular.

- Chiplets: Smaller, specialized dies (chiplets) are manufactured separately and then interconnected on a single package. This allows for:

- Improved Yields: It’s easier to produce small, perfect chiplets than one large, complex chip.

- Modularity: Different chiplets (CPU cores, GPU cores, I/O, memory controllers) can be mixed and matched to create custom processors.

- Cost Efficiency: Using different process nodes for different chiplets can optimize cost and performance.

- Heterogeneous Integration: This extends the concept of chiplets to integrate diverse components (logic, memory, sensors) in a single, compact 3D package, reducing latency and improving power efficiency.

This modular approach is a key strategy for the future evolution of computer processors, especially for high-performance computing and specialized AI hardware.
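The yield argument for chiplets can be sketched with the simple Poisson defect model, in which the chance that a die has no defects falls off exponentially with its area. The defect density and die areas below are assumed round numbers, not figures from any real fab, and production yield models are more elaborate.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to have zero defects under a Poisson defect model."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


DEFECT_DENSITY = 0.1   # defects per square centimetre (assumed)
MONOLITHIC_AREA = 6.0  # one large die, in cm^2 (assumed)
CHIPLET_AREA = 1.5     # each of four chiplets, in cm^2 (assumed)

monolithic = poisson_yield(MONOLITHIC_AREA, DEFECT_DENSITY)
chiplet = poisson_yield(CHIPLET_AREA, DEFECT_DENSITY)
print(f"good monolithic dies: {monolithic:.1%}")
print(f"good chiplets:        {chiplet:.1%}")
```

A defect on the monolithic die scraps all 6 cm² of silicon, while a defect on a chiplet scraps only 1.5 cm². Because chiplets can be tested before packaging, only known-good dies are assembled, so much less of each wafer is wasted.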

The Rise of Edge Computing Processors

With the proliferation of IoT devices and the demand for real-time processing, processors designed for edge computing are gaining prominence.

- Low Power, High Efficiency: Edge processors are optimized for minimal power consumption while still offering sufficient computational power for tasks like sensor data analysis, local AI inference, and secure communication without relying solely on cloud connectivity.

- Security Features: Often include hardware-level security features to protect data and device integrity at the edge.

Quantum Computing: The Next Frontier

Looking further into the future, quantum computing represents a fundamental shift in processing paradigms.

- Qubits: Unlike classical bits, which are always either 0 or 1, a qubit can exist in a superposition of both states until it is measured, and multiple qubits can be entangled so that their states are correlated. Quantum algorithms exploit these properties to tackle certain calculations that are impractical for classical machines.

- Solving Intractable Problems: Quantum computers promise to solve certain problems currently intractable for even the most powerful classical supercomputers, in areas such as drug discovery, materials science, and cryptography.

- Early Stages: While still in its infancy and operating in highly controlled environments, quantum computing holds immense potential to revolutionize fields far beyond what current processors can achieve. This isn’t just an evolution; it’s a revolution in waiting.
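Superposition can be illustrated with a few lines of arithmetic. The sketch below simulates a single qubit on a classical machine, restricted to real-valued amplitudes for simplicity (general qubit states use complex amplitudes). It applies the Hadamard gate, a standard operation that turns a definite 0 into an equal mix of 0 and 1. The function names are illustrative.

```python
import math


def apply_hadamard(state: tuple[float, float]) -> tuple[float, float]:
    """Apply the Hadamard gate to a qubit given as amplitudes (for 0, for 1)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(state: tuple[float, float]) -> tuple[float, float]:
    """Probability of reading 0 or 1: the square of each real amplitude."""
    a, b = state
    return (a * a, b * b)


zero = (1.0, 0.0)                   # a qubit that is definitely 0
superposed = apply_hadamard(zero)   # now an equal superposition
p0, p1 = measurement_probabilities(superposed)
print(f"P(0)={p0:.2f}  P(1)={p1:.2f}")
```

Simulating one qubit takes two numbers, but simulating n entangled qubits takes 2^n amplitudes. That exponential growth is the reason classical machines struggle to emulate even modest quantum processors.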

This continued push for smaller, faster, more efficient, and specialized processors ensures that the innovation in computing will not slow down, even as the challenges grow.

| Processor Era | Key Characteristics | Notable Processors (Examples) | Impact on Computing |
|---|---|---|---|
| Pre-Electronic | Mechanical and electro-mechanical, large, slow | Analytical Engine, Harvard Mark I | Concept of programmable computation |
| Vacuum Tube | Electronic, room-sized, high power/heat, fragile | ENIAC, UNIVAC I | First truly electronic digital computers |
| Transistor/IC | Miniaturization, lower power/heat, more reliable | First Transistor, Early ICs | Enabled smaller, more powerful, and reliable computers |
| Microprocessor (4/8-bit) | CPU on a single chip, early personal computing | Intel 4004, Intel 8080, Motorola 6800 | Birth of the personal computer, hobbyist computing |
| 16/32-bit PCs | More powerful, more memory, GUI OS support | Intel 8088, Motorola 68000 | Mainstream personal computing, professional workstations |
| Pipelining/Superscalar | Instruction-level parallelism | Intel Pentium, AMD K5 | Significant performance boost in single-core systems |
| Multi-Core | Multiple cores on one chip, parallel processing | Intel Core Duo, AMD Athlon X2 | Enhanced multitasking, demanding applications, gaming |
| Specialized (GPU/NPU) | Massively parallel for graphics/AI, heterogeneous | NVIDIA GPUs, Google TPUs, Apple Neural Engine | Revolutionized AI, scientific computing, modern gaming |
| Modern & Future (2025) | Chiplets, advanced nodes, quantum computing (R&D) | AMD Zen, Intel Meteor Lake | Continued scaling, custom silicon, preparation for post-silicon era |


The Astonishing Evolution of Computer Processors: From Gigantic Machines to Pocket Powerhouses in 2025

Imagine a world where a single calculation required a room full of vacuum tubes, consuming immense power and generating significant heat. This was the reality of early computing. Fast forward to 2025, and we carry devices in our pockets with processing power that would have been unimaginable just decades ago. The journey behind this transformation is the fascinating evolution of computer processors – the very “brains” that power our digital world. This article will delve into the remarkable progress of these tiny titans, exploring the key milestones, technological breakthroughs, and the future trajectory of computing power.

Key Takeaways

- The evolution of computer processors began with bulky, vacuum-tube machines, progressing through transistors and integrated circuits to today’s nano-scale wonders.

- Moore’s Law has been a primary driver, predicting the exponential growth in transistor density, leading to continuous improvements in performance and efficiency.

- Significant milestones include the invention of the transistor, the first microprocessor (Intel 4004), the rise of multi-core architectures, and the emergence of specialized processors like GPUs and NPUs.

- Modern processors are characterized by incredible miniaturization, multi-core designs, advanced cache systems, and AI acceleration capabilities, enabling complex tasks on compact devices.

- Looking ahead to 2025 and beyond, processor development focuses on sustainable computing, advanced materials, quantum computing, and further specialization for AI and parallel processing.

The Dawn of Computing: From Relays to Vacuum Tubes

Before the integrated circuit and even the transistor, computers were behemoths. The earliest forms of digital calculation used electromechanical relays, which were slow and prone to wear. The true “dawn” of electronic computing began with vacuum tubes, which offered significantly faster switching speeds.

First Glimmers: Early Electronic Computers

- ENIAC (Electronic Numerical Integrator and Computer, 1946): Often cited as the first general-purpose electronic digital computer, ENIAC filled an entire room, weighed 30 tons, and contained over 17,000 vacuum tubes. It could perform thousands of calculations per second, a marvel at the time, but its tubes were unreliable and consumed massive amounts of power.

- EDSAC (Electronic Delay Storage Automatic Calculator, 1949): One of the first practical stored-program computers. Holding the program in memory allowed the machine to be reprogrammed without physical rewiring, a monumental step in the evolution of computer processors.

“The ENIAC, though a marvel of its time, vividly illustrated the limitations of vacuum-tube technology: immense size, high power consumption, and frequent breakdowns. These challenges spurred the innovations that would define the next era of processor evolution.”

The Transistor Revolution: A Paradigm Shift

The invention of the transistor at Bell Labs in 1947 by John Bardeen, Walter Brattain, and William Shockley marked a pivotal moment. Transistors were smaller, more reliable, consumed less power, and generated less heat than vacuum tubes. This invention laid the groundwork for miniaturization, a core theme in the evolution of computer processors.

The Rise of Integrated Circuits (ICs)

While transistors were revolutionary, assembling them individually was still cumbersome. The next leap came in the late 1950s with the invention of the integrated circuit (IC) by Jack Kilby (Texas Instruments) and Robert Noyce (Fairchild Semiconductor). An IC combined multiple transistors and other components onto a single semiconductor chip. This innovation led to:

- Mass production of electronic components.

- Further reduction in size and cost.

- Increased reliability and speed.

The Birth of the Microprocessor: Intel 4004 to Modern Marvels

The true “processor” as we understand it today emerged with the microprocessor. This was a CPU entirely contained on a single IC chip. Its arrival dramatically accelerated the pace of computing innovation.

Key Milestones in Processor Development

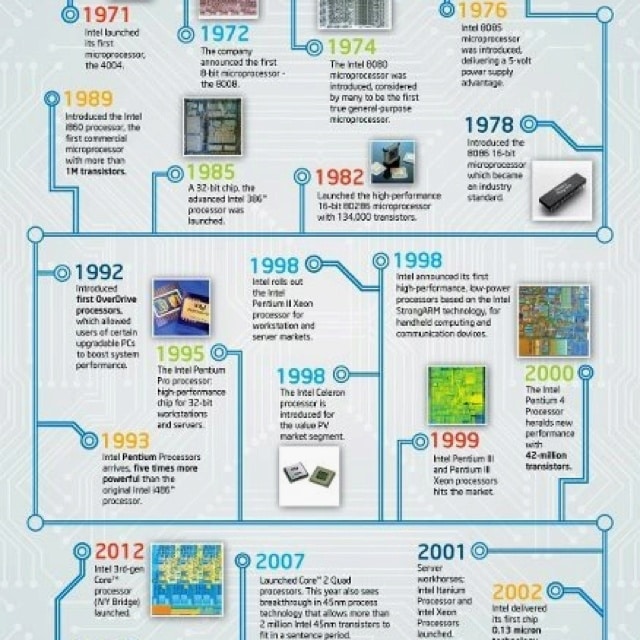

| Year | Processor/Event | Significance |
|---|---|---|
| 1971 | Intel 4004 | World’s first commercial single-chip microprocessor. Had 2,300 transistors. |
| 1974 | Intel 8080 | Became the brain of the Altair 8800, one of the first personal computers. |
| 1978 | Intel 8086/8088 | The foundation of the IBM PC, establishing the x86 architecture. |
| 1985 | Intel 386 | Intel’s first 32-bit x86 processor, enabled multitasking in operating systems. |
| 1993 | Intel Pentium | Brought superscalar execution to the x86 line, popularizing desktop computing. |
| 1999 | AMD Athlon | Challenged Intel’s dominance, pushing performance boundaries. |
| 2000s | Multi-Core Processors | Shift from increasing clock speed to adding more processing cores. |
| 2007 | Apple iPhone | Pushed mobile processor development into high gear. |
| 2010s | Integrated Graphics & Heterogeneous Computing | CPUs with integrated GPUs, specialized accelerators (e.g., AI). |
| 2020s | Arm-based PCs & Advanced Manufacturing | Apple M-series, RISC-V, 3nm/2nm process nodes. |

Moore’s Law: The Driving Force

Gordon Moore’s 1965 observation, later known as Moore’s Law and revised by Moore in 1975, predicted that the number of transistors on an integrated circuit would double approximately every two years. This wasn’t a physical law but an empirical trend that the industry adopted as a roadmap, which in turn spurred innovation. It held remarkably well for decades, leading to:

- Exponential growth in processing power.

- Decreased cost per transistor.

- Increased energy efficiency.

- Miniaturization, enabling smaller devices.

While many expect the traditional pace of transistor scaling to slow, the spirit of Moore’s Law continues to drive innovation in areas like 3D stacking and new architectures.

The Multi-Core Era and Specialized Processors

By the mid-2000s, hitting higher clock speeds became difficult due to power consumption and heat. The industry pivoted, leading to the rise of multi-core processors. Instead of one super-fast core, chips started incorporating two, four, eight, or even dozens of cores.

Diving Deeper into Modern Processor Architectures

The evolution of computer processors in the 21st century is defined by several key architectural shifts:

- Multi-core Processing: Allows a processor to handle multiple tasks simultaneously, vastly improving multitasking and performance for multi-threaded applications. This is why a modern quad-core processor (even at lower clock speeds) often outperforms an older single-core processor with a higher clock speed.

- Cache Memory: Processors rely heavily on different levels of cache (L1, L2, L3) to store frequently accessed data close to the processing cores, minimizing the slower access to main system RAM. A larger and faster cache significantly boosts performance.

- Instruction Set Architectures (ISAs):

- x86: Dominant in desktop and server markets (Intel, AMD). Complex Instruction Set Computer (CISC) design.

- ARM: Predominant in mobile devices, now making inroads into laptops and servers (Apple M-series, Qualcomm). Reduced Instruction Set Computer (RISC) design, known for power efficiency.

- RISC-V: An open-source ISA gaining traction for its flexibility and customization, promising to democratize processor design.

- Integrated Graphics Processors (IGPs): Many modern CPUs include a GPU directly on the same die. This saves space and power, making them ideal for laptops and budget desktops, though dedicated GPUs still offer superior performance for demanding graphics tasks.
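The benefit of a cache hierarchy can be put into numbers with the standard average-memory-access-time calculation: each level's latency counts only for the accesses that actually reach it. The latencies and hit rates below are hypothetical round figures, not measurements of any real processor, and the function name is illustrative.

```python
def average_access_time(levels: list[tuple[float, float]], memory_latency: float) -> float:
    """Average memory access time, in cycles, for a cache hierarchy.

    `levels` lists (hit_latency, hit_rate) from L1 outward, where hit_rate is
    the fraction of accesses reaching that level which it can serve. A miss
    falls through to the next level and finally to main memory.
    """
    total = 0.0
    reach_probability = 1.0  # chance that an access gets as far as this level
    for hit_latency, hit_rate in levels:
        total += reach_probability * hit_latency
        reach_probability *= 1.0 - hit_rate
    return total + reach_probability * memory_latency


# Hypothetical hierarchy: (latency in cycles, hit rate) for L1, L2, L3.
hierarchy = [(4, 0.95), (12, 0.80), (40, 0.75)]
with_cache = average_access_time(hierarchy, memory_latency=200)
without_cache = average_access_time([], memory_latency=200)
print(f"with caches: {with_cache:.1f} cycles, without: {without_cache:.1f} cycles")
```

Under these assumed figures the average access costs a few cycles instead of a couple of hundred, which is why cache size and speed have such a visible effect on real-world performance.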

The Rise of Specialized Processors

As computing needs diversified, so did processors:

- Graphics Processing Units (GPUs): Initially for rendering graphics, GPUs evolved into powerful parallel processors, essential for scientific simulations, cryptocurrency mining, and critically, Artificial Intelligence (AI) and Machine Learning (ML).

- Neural Processing Units (NPUs): Emerging in smartphones and increasingly in PCs (especially in 2025), NPUs are dedicated hardware accelerators optimized for AI workloads, such as facial recognition, natural language processing, and advanced camera features.

- Digital Signal Processors (DSPs): Used for processing audio and video signals in real-time.

- Field-Programmable Gate Arrays (FPGAs): Reconfigurable hardware that can be programmed for specific tasks, offering flexibility and performance for certain applications.

The Current State in 2025: Miniaturization and AI Integration

As we navigate 2025, the processor landscape is characterized by incredible miniaturization, pushing the boundaries of physics, and deep integration of AI capabilities.

- Advanced Manufacturing Nodes: Leading manufacturers are now producing processors on 3-nanometer (nm) and even experimenting with 2nm process nodes. This allows for billions of transistors on a chip smaller than a fingernail, leading to unprecedented performance and efficiency.

- Heterogeneous Computing: Modern systems often combine different types of processors (CPU, GPU, NPU) working together. This “heterogeneous” approach allows each task to be handled by the most efficient hardware, optimizing overall system performance and power consumption.

- AI Everywhere: Almost every new processor released in 2025, from smartphones to enterprise servers, includes some form of AI acceleration. This is crucial for running sophisticated AI models locally, enhancing privacy, and reducing latency.

- Sustainability Focus: With increasing computing power comes greater energy consumption. The industry is heavily investing in more energy-efficient architectures, advanced cooling solutions, and power management techniques to reduce environmental impact. ♻️
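The heterogeneous computing idea described above, where each task goes to the hardware best suited to it, can be sketched in a few lines of Python. This is only an illustration: the workload categories, the `PREFERRED_UNIT` table, and the `pick_unit` function are hypothetical names invented here, and real schedulers weigh many more factors (data size, power state, driver support).

```python
from dataclasses import dataclass

# Hypothetical mapping from workload kind to the processor type that
# usually handles it most efficiently. Illustrative only.
PREFERRED_UNIT = {
    "sequential": "CPU",
    "data_parallel": "GPU",
    "neural_inference": "NPU",
}


@dataclass
class Task:
    name: str
    kind: str  # expected to be one of the keys in PREFERRED_UNIT


def pick_unit(task: Task, available: set[str]) -> str:
    """Return the preferred unit if the machine has it, else fall back to the CPU."""
    preferred = PREFERRED_UNIT.get(task.kind, "CPU")
    return preferred if preferred in available else "CPU"


if __name__ == "__main__":
    laptop = {"CPU", "GPU"}  # assumed machine without an NPU
    tasks = [
        Task("spreadsheet recalculation", "sequential"),
        Task("video filter", "data_parallel"),
        Task("background blur", "neural_inference"),
    ]
    for t in tasks:
        print(f"{t.name} -> {pick_unit(t, laptop)}")
```

The CPU fallback reflects the CPU's role as the general-purpose processor: it can run any of these workloads, just less efficiently than a dedicated accelerator.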

Future Horizons: Beyond Silicon and Quantum Leaps

What does the future hold for the evolution of computer processors? The quest for greater power, efficiency, and new capabilities continues relentlessly.

Anticipated Trends and Emerging Technologies

- Post-Silicon Materials: As silicon-based transistors approach their physical limits, research into new materials like carbon nanotubes, graphene, and 2D materials aims to create even smaller and faster transistors.

- 3D Stacking and Chiplets: Instead of monolithic chips, future processors will increasingly stack components vertically (3D stacking) or use “chiplets” – smaller, specialized chip components connected on an interposer. This allows for greater density and flexibility in design.

- Optical Computing: Using light instead of electrons for processing could offer significantly faster speeds and lower power consumption, though this technology is still in early research phases.

- Quantum Computing: A revolutionary paradigm that uses quantum-mechanical phenomena (superposition, entanglement) to solve certain problems exponentially faster than classical computers. While not for general-purpose computing yet, quantum processors are rapidly advancing for specialized tasks.

- Neuromorphic Computing: Inspired by the human brain, neuromorphic chips aim to process information in a more parallel and energy-efficient way, particularly suited for AI and machine learning tasks.

- Further Specialization: Expect to see an even greater proliferation of highly specialized accelerators for specific tasks, moving beyond just GPUs and NPUs to address niche computing challenges.

Understanding Key Processor Terminology

Transistor: A semiconductor device used to amplify or switch electronic signals and electrical power. It is the fundamental building block of modern electronic devices, including computer processors. The cores and caches of a modern processor are built from billions of these tiny switches.

Clock Speed (GHz): The number of cycles per second that a processor can perform, typically measured in gigahertz (GHz). A higher clock speed generally means faster processing for individual tasks, though multi-core designs have shifted focus beyond just this metric.

Core: An individual processing unit within a CPU. Multi-core processors contain several cores, allowing them to execute multiple instructions or tasks simultaneously, enhancing multitasking and parallel processing capabilities.

Cache Memory: A small, very fast memory integrated into or near the CPU, used to store frequently accessed data and instructions to reduce the time it takes for the CPU to access them from main memory (RAM). This acts as a high-speed buffer. ⚡

Lithography (Process Node): The photolithography process used to create integrated circuits, including processors, by patterning and etching intricate features onto a silicon wafer. The ‘nm’ (nanometer) figure names the process node. It once tracked the smallest feature size, but on recent nodes it is largely a generational label; a smaller number still indicates smaller, denser transistors, which influences performance and efficiency.

Microarchitecture: The specific internal design of a CPU that implements its instruction set architecture (ISA), including its cache hierarchy, pipelines, and execution units. It dictates how efficiently a processor performs tasks at a given core count and clock speed.
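To make the Cache Memory entry above more concrete, the standard textbook formula for average memory access time is hit time plus miss rate times miss penalty. The short Python sketch below applies it; the function name and the latency figures in the example are illustrative assumptions, not measurements of any real processor.

```python
def average_access_time_ns(hit_time_ns: float,
                           miss_rate: float,
                           miss_penalty_ns: float) -> float:
    """Average memory access time: hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


if __name__ == "__main__":
    # Assumed, illustrative latencies: 1 ns for a cache hit, 100 ns extra
    # when the data must be fetched from main memory (RAM).
    for miss_rate in (0.01, 0.05, 0.20):
        t = average_access_time_ns(1.0, miss_rate, 100.0)
        print(f"miss rate {miss_rate:.0%}: about {t:.1f} ns per access")
```

Even a small change in miss rate moves the average noticeably, which is why cache size and hierarchy are such important parts of a microarchitecture.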

FAQ: Your Questions About Processor Evolution Answered

What is the primary function of a computer processor?

The primary function of a computer processor, also known as the Central Processing Unit (CPU), is to execute instructions, perform calculations, and manage the flow of information for a computer system. It acts as the ‘brain’ of the computer, interpreting and carrying out commands from software and hardware.

How has Moore’s Law influenced the evolution of computer processors?

Moore’s Law, which observes that the number of transistors on a microchip doubles approximately every two years, has been a driving force behind the rapid evolution of computer processors. It has led to smaller, more powerful, and more energy-efficient CPUs, enabling continuous advancements in computing technology over decades.

What are the key differences between CPUs and GPUs?

CPUs (Central Processing Units) are designed for general-purpose computing, excelling at complex sequential tasks. GPUs (Graphics Processing Units) are specialized processors with thousands of smaller cores, optimized for parallel processing, making them ideal for tasks like graphics rendering, machine learning, and scientific simulations where many calculations can be performed simultaneously.

What is the significance of multi-core processors?

Multi-core processors contain two or more independent processing units (cores) on a single chip. This allows the CPU to handle multiple tasks or threads of a single task simultaneously, significantly improving overall performance, especially in multitasking environments and for applications designed to leverage parallel processing.

What are some future trends in computer processor development?

Future trends in computer processor development include continued miniaturization (e.g., beyond silicon), advancements in specialized processors (like AI accelerators and quantum processors), more heterogeneous computing architectures (combining various types of cores), and increased focus on energy efficiency and sustainable computing.

How to Choose the Right Processor for Your Needs in 2025

Given the rapid evolution of computer processors, selecting the right one can be daunting. Here’s a simple guide:

Step 1: Identify Your Primary Use Case

Determine what you will primarily use the computer for. Is it for casual browsing, office work, gaming, video editing, or professional CAD/design? Different use cases demand different processor strengths. A gamer needs different specs than a student.

Step 2: Understand Core Count and Clock Speed

For general use, a quad-core processor (like an Intel Core i3 or AMD Ryzen 3) is often sufficient. For gaming, video editing, or heavy multitasking, consider 6, 8, or more cores (Intel Core i5/i7/i9, AMD Ryzen 5/7/9). Clock speed (GHz) indicates how many cycles per second a core can perform; higher is generally better for single-threaded tasks, but core count is crucial for parallel workloads.
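The trade-off between core count and single-threaded speed can be estimated with Amdahl's law, a standard model that this guide does not name but that fits the point above: the speedup from extra cores is capped by the portion of a program that cannot run in parallel. A minimal Python sketch follows; the function name and the sample fractions are illustrative assumptions.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup over one core when only part of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workloads: one mostly serial, one mostly parallel.
    for label, p in (("mostly serial (30% parallel)", 0.30),
                     ("mostly parallel (95% parallel)", 0.95)):
        for cores in (4, 8, 16):
            print(f"{label}, {cores} cores: {amdahl_speedup(p, cores):.2f}x")
```

For a mostly serial workload, going from 4 to 16 cores barely helps, so clock speed and per-core performance matter more. For a highly parallel workload such as video encoding, the extra cores pay off.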

Step 3: Consider Processor Generation and Architecture

Newer generations of processors typically offer better performance and efficiency. Look for the latest models from manufacturers like Intel (e.g., 14th Gen Core or the newer Core Ultra series in 2025) or AMD (e.g., Ryzen 7000 series or newer). Research their architectural improvements, like hybrid core designs from Intel or AMD’s Zen architecture advancements.

Step 4: Evaluate Integrated vs. Dedicated Graphics

If you’re not planning on intense gaming or graphics work, a processor with integrated graphics (often called an iGPU; AMD brands such chips APUs) can save costs and power. For serious gaming or professional creative work (video editing, 3D rendering), a dedicated GPU is essential, and the CPU should be powerful enough not to bottleneck it. Many newer CPUs in 2025 feature very capable integrated graphics for light gaming and media consumption.

Step 5: Check Power Consumption and Cooling Requirements

High-performance processors consume more power and generate more heat. The TDP (Thermal Design Power) rating, given in watts, indicates how much heat the cooling system must be able to dissipate. Ensure your power supply unit (PSU) is adequate and that you have sufficient cooling (e.g., an air cooler or liquid cooler) for your chosen CPU to prevent thermal throttling and maintain optimal performance. Overclocking requires even more robust cooling.
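A rough way to check whether a power supply is adequate is to add up the expected draw of each component and leave some headroom. The Python sketch below shows the arithmetic; the function name, the 30% headroom default, and the wattage figures are all assumptions for illustration, so use the numbers published for your own parts.

```python
def recommended_psu_watts(component_watts: dict[str, float],
                          headroom: float = 0.3) -> float:
    """Sum the estimated component draw and add a safety margin.

    headroom is a fraction (0.3 means 30% extra); the default is an
    assumed rule of thumb, not a manufacturer specification.
    """
    if headroom < 0:
        raise ValueError("headroom must not be negative")
    total = sum(component_watts.values())
    return total * (1.0 + headroom)


if __name__ == "__main__":
    # Placeholder wattages for a hypothetical build.
    build = {
        "cpu": 125.0,
        "gpu": 250.0,
        "motherboard_ram_storage_fans": 75.0,
    }
    print(f"Suggested PSU capacity: about {recommended_psu_watts(build):.0f} W")
```

Keep in mind that a CPU's TDP rating is not the same as its peak power draw, which can be higher under boost, so a generous margin is the safer choice.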

Step 6: Read Reviews and Benchmarks

Before making a final decision, consult independent reviews and benchmark tests for the processors you are considering. These real-world performance metrics can provide invaluable insights into how a processor performs in various applications, offering a practical perspective beyond raw specifications.

Conclusion: The Unstoppable March of Progress

The evolution of computer processors is a testament to human ingenuity and the relentless pursuit of progress. From the room-sized ENIAC of the 1940s to the billions of transistors packed into a chip smaller than a coin in 2025, the journey has been nothing short of spectacular. This continuous advancement has not only reshaped computing but has also fundamentally transformed every aspect of modern life, from communication and entertainment to scientific discovery and healthcare.

As we look ahead, the challenges of physics and economics will undoubtedly lead to new paradigms. We are moving beyond simply shrinking transistors to exploring novel materials, three-dimensional architectures, and entirely new computing models like quantum and neuromorphic processors. The future promises even more specialized, efficient, and powerful “brains” for our machines, pushing the boundaries of what’s possible. The next decades will likely witness another profound shift, continuing the astonishing legacy of processor innovation. Get ready for an even faster, smarter, and more interconnected world, all powered by the ever-evolving core of the computer.

Actionable Next Steps:

- Stay Informed: Follow tech news and reputable hardware review sites to keep up with the latest processor releases and architectural advancements.

- Evaluate Your Needs: Regularly assess your computing requirements to determine if your current processor is meeting your demands, or if an upgrade would significantly enhance your productivity or enjoyment.

- Consider Energy Efficiency: When purchasing new hardware, factor in power consumption. More efficient processors not only save on electricity bills but also contribute to a greener planet.

- Explore Specialized Hardware: If your tasks involve heavy AI, graphics, or scientific computing, research dedicated GPUs or NPUs that can complement your CPU for optimal performance.